Hyperion Docker

Warning

Hyperion Docker is not recommended for production environments. Use it only for testing, debugging, and local networks.

Hyperion Docker is a multi-container Docker application designed to install and run Hyperion as quickly as possible. It will index data from a development chain where you can define your contracts, execute some actions, and observe the behavior when querying the Hyperion API.

Recommended Operating System

Ubuntu 24.04

Architecture

Layers

To simplify, we divide the microservices involved with Hyperion into layers.

- Blockchain (Leap with state-history plugin)

- Hyperion (API/Indexer)

- Infrastructure (Redis, RabbitMQ, MongoDB, RedisCommander)

- Elasticsearch/Kibana (New mandatory external layer)

The first layer is the blockchain itself, the node service. Hyperion needs a blockchain to consume data from. This layer has a single microservice:

Leap Node

Local blockchain for data consumption

The second layer is Hyperion itself, which is divided into 2 microservices:

Hyperion API

This service allows interaction with the indexed data.

Hyperion Indexer

As the name suggests, this service connects to the blockchain to fetch and index data.

The third layer, Infrastructure Services, includes 3 main microservices:

- Redis

- RabbitMQ

- MongoDB

The fourth layer (new) is composed of Elasticsearch and Kibana, which are installed separately using the official Elastic method. This change allows for greater flexibility and alignment with best practices recommended by Elastic.

Considering this structure, the Project Repository contains 3 folders representing the first three layers mentioned:

- hyperion

- infra

- nodeos

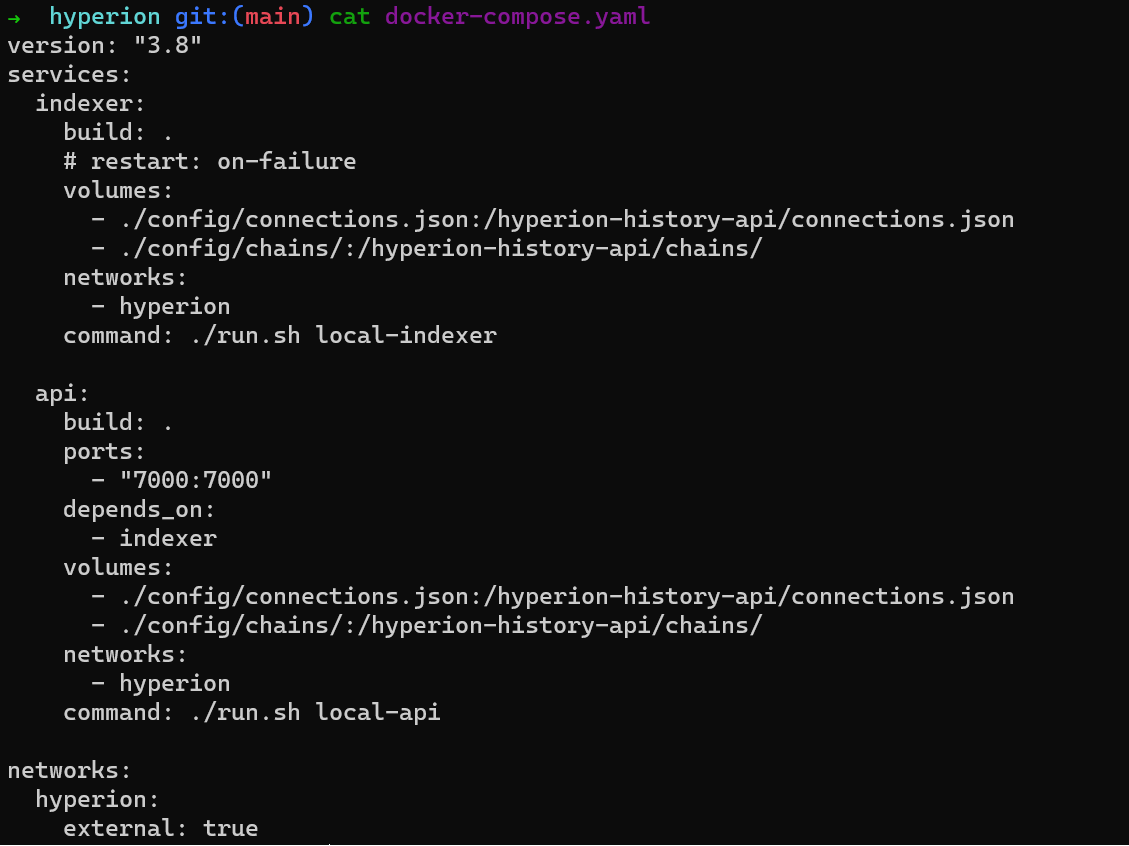

In each directory mentioned above, we added a docker-compose.yaml file responsible for starting their respective microservices.

For those who have never used docker-compose, it allows the creation of multiple containers simultaneously. These containers are declared as services.

All services (containers) declared within a docker-compose.yaml share the same network by default. Since we separated the containers into different files, we need to create a network in Docker that will be shared. The procedure will be detailed below in the configuration process.

Getting Started

Prerequisites

Ensure that Docker and Docker Compose are installed on your system.
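One way to confirm the prerequisites is a small helper that reports whether a command is on the PATH. This is a minimal sketch; the function name `require_cmd` is introduced here for illustration and is not part of the repository.

```shell
#!/bin/sh
# Report whether a command is available on PATH.
# require_cmd is a hypothetical helper name used only in this sketch.
require_cmd() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "found: $1"
    else
        echo "missing: $1" >&2
        return 1
    fi
}

# Usage (prints both versions when Docker and the Compose plugin are installed):
#   require_cmd docker && docker --version && docker compose version
```

The guide uses the `docker compose` subcommand throughout, so `docker compose version` is the relevant check rather than a standalone `docker-compose` binary.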

Installing Elasticsearch and Kibana

Hyperion depends on Elasticsearch for indexing and searching data. Starting with this version, we recommend that the installation and management of Elasticsearch and Kibana be done using the official Elastic method, ensuring greater flexibility and ease of maintenance.

Execute the command below to install locally:

curl -fsSL https://elastic.co/start-local | sh

This command will create a folder called elastic-start-local with all the necessary files, including:

- Management scripts (start.sh, stop.sh, uninstall.sh)

- .env file with access credentials

- Elastic's docker-compose.yml file

After installation, start the Elasticsearch and Kibana services:

cd elastic-start-local

./start.sh

You can verify that the services are working by accessing:

- Elasticsearch: http://localhost:9200

- Kibana: http://localhost:5601

Access credentials will be available in the .env file. You will need them later to configure Hyperion.

Tip

To view the credentials in the .env file, you can use:

cat elastic-start-local/.env
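To pull a single value out of the .env file and use it for a quick authenticated request, something like the following may help. It is a sketch under assumptions: `read_env_var` is a hypothetical helper, and `ES_LOCAL_PASSWORD` is an assumed variable name, so check the names in your own .env file first.

```shell
#!/bin/sh
# Print the value of one KEY from a KEY=value style .env file.
# read_env_var is a hypothetical helper name used only in this sketch.
read_env_var() {
    # $1 = path to the .env file, $2 = variable name
    grep "^$2=" "$1" | head -n 1 | cut -d= -f2- | sed 's/^"\(.*\)"$/\1/'
}

# Usage, assuming the password is stored as ES_LOCAL_PASSWORD (an assumption):
#   pw=$(read_env_var elastic-start-local/.env ES_LOCAL_PASSWORD)
#   curl -u "elastic:$pw" http://localhost:9200
```

The `cut -d= -f2-` step keeps everything after the first `=`, so values that themselves contain `=` are returned intact.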

Production Environments

For deployments in production environments, consult the official Elastic documentation for advanced configurations: Elastic Docs - Self-Managed

Infrastructure Layer

1. Clone the repository

Clone the repository to your local machine, in the same parent directory where the elastic-start-local folder was created:

# Make sure you're in the same directory where elastic-start-local was created

cd .. # If you're currently in the elastic-start-local directory

git clone https://github.com/eosrio/hyperion-docker.git

cd hyperion-docker

Directory Structure

Your directory structure should look like this:

/your_parent_directory/
├── elastic-start-local/ # Elasticsearch installation
└── hyperion-docker/ # Hyperion Docker repository

This directory structure is required for the start.sh script to work correctly.
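A quick way to confirm the two directories sit side by side is sketched below. The function name `check_layout` is hypothetical; only the two directory names come from this guide.

```shell
#!/bin/sh
# Succeed only if both directories exist under the given parent directory.
# check_layout is a hypothetical helper name used only in this sketch.
check_layout() {
    parent=${1:-.}
    status=0
    for dir in elastic-start-local hyperion-docker; do
        if [ -d "$parent/$dir" ]; then
            echo "ok: $parent/$dir"
        else
            echo "missing: $parent/$dir" >&2
            status=1
        fi
    done
    return "$status"
}

# Usage, from inside the hyperion-docker directory:
#   check_layout ..
```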

2. Verify Docker is running

Make sure Docker is running by executing the following command in the terminal:

docker ps

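Expected result: a table of running containers (possibly only the header row) and no error about connecting to the Docker daemon.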

3. Create the shared network

Create a network that will be shared between containers by executing the command:

docker network create hyperion

Expected result: the command prints the ID of the newly created network.
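Running `docker network create` a second time fails because the network name is already taken. If you expect to repeat the setup, a guarded variant can be sketched as follows; `ensure_network` is a hypothetical helper name, not something shipped with the repository.

```shell
#!/bin/sh
# Create the named Docker network only if it does not already exist.
# ensure_network is a hypothetical helper name used only in this sketch.
ensure_network() {
    if docker network inspect "$1" >/dev/null 2>&1; then
        echo "network $1 already exists"
    else
        docker network create "$1"
    fi
}

# Usage:
#   ensure_network hyperion
```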

4. Create the microservices

Now, let's start creating the microservices of the infrastructure layer.

Navigate to the infra directory of the repository and execute the following command:

cd infra

docker compose up -d

Flag -d

Note that we use the -d flag to run in detached mode, allowing us to continue using the command line session.

This command will create the microservices (Redis, RabbitMQ, MongoDB) needed for Hyperion to work. Note that Elasticsearch and Kibana are no longer included in this layer, as they are now managed separately.

The first time you run the command, it may take some time for everything to be configured. You can follow the execution log using the command:

docker compose logs -f

Press Ctrl+C to terminate the log reading process.

5. Verify the services

Check if the services are working:

- RabbitMQ - http://localhost:15672/
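Because the containers can take a while to come up on the first run, polling the URL may be more convenient than refreshing a browser. The following is a minimal sketch; `wait_for_url` and its default of 30 attempts two seconds apart are illustrative choices, not values from the repository.

```shell
#!/bin/sh
# Poll a URL until it answers successfully or the attempts run out.
# wait_for_url is a hypothetical helper; the defaults are arbitrary.
wait_for_url() {
    url=$1
    attempts=${2:-30}
    delay=${3:-2}
    i=1
    while [ "$i" -le "$attempts" ]; do
        # -f makes curl fail on HTTP error codes, -sS silences progress output
        if curl -fsS -o /dev/null "$url" 2>/dev/null; then
            echo "up: $url"
            return 0
        fi
        sleep "$delay"
        i=$((i + 1))
    done
    echo "timed out: $url" >&2
    return 1
}

# Usage:
#   wait_for_url http://localhost:15672/
```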

After completing the Infrastructure Layer configuration, we can proceed to the Leap Layer (nodeos).

Leap Layer (nodeos)

Navigate to the nodeos directory in the repository and execute:

cd ../nodeos

docker compose up -d

This layer was added to the repository assuming that you don't have a configured blockchain from which the Hyperion Indexer will consume data.

After the infrastructure and blockchain node are configured, we can finally start Hyperion.
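Before starting Hyperion, it can help to confirm that every service declared in a layer is actually running. The sketch below compares the services listed in a layer's compose file with those reported as running. It assumes a Docker Compose v2 release in which `docker compose ps` accepts `--status`; `layer_ready` is a hypothetical helper name.

```shell
#!/bin/sh
# Succeed when every service declared in a layer's compose file is running.
# layer_ready is a hypothetical helper name used only in this sketch.
layer_ready() {
    (
        cd "$1" || exit 1
        # Services declared in the compose file of this directory
        declared=$(docker compose config --services | sort)
        # Services that currently have a running container
        running=$(docker compose ps --services --status running | sort)
        if [ "$declared" = "$running" ]; then
            echo "ready: $1"
        else
            echo "not ready: $1" >&2
            exit 1
        fi
    )
}

# Usage, from the repository root:
#   layer_ready infra && layer_ready nodeos
```

The body runs in a subshell, so the `cd` does not change the caller's working directory.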

Hyperion Layer

This layer has 2 microservices: Hyperion API and Hyperion Indexer.

Starting Hyperion services

To start the Hyperion services, we've created a convenient startup script that automatically handles the Elasticsearch password configuration. Navigate to the hyperion directory and execute the following command:

cd ../hyperion

./start.sh

The start.sh script performs the following operations:

- Retrieves Elasticsearch password: Automatically reads the password from the .env file generated during the Elasticsearch installation (using the relative path ../../elastic-start-local/.env)

- Updates configuration: Replaces the password placeholder in the connections.json file with the actual password

- Starts services: Executes docker compose up -d to start the Hyperion services
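The three operations can be approximated as follows. This is a sketch of the idea, not the repository's actual start.sh: the function name `inject_password` is hypothetical, and `ES_LOCAL_PASSWORD` is an assumed variable name for the password in the .env file. The `ELASTIC_PASSWORD` placeholder is the one this guide mentions for connections.json.

```shell
#!/bin/sh
# Sketch only; the real start.sh in the repository may differ.
# inject_password is a hypothetical helper name.
inject_password() {
    env_file=$1
    conn_file=$2
    # 1. Read the password (ES_LOCAL_PASSWORD is an assumed variable name).
    password=$(grep '^ES_LOCAL_PASSWORD=' "$env_file" | head -n 1 | cut -d= -f2-)
    [ -n "$password" ] || { echo "no password in $env_file" >&2; return 1; }
    # 2. Escape characters that are special in a sed replacement, then
    #    substitute the placeholder and replace the file.
    escaped=$(printf '%s' "$password" | sed 's/[&/\]/\\&/g')
    sed "s/ELASTIC_PASSWORD/$escaped/g" "$conn_file" > "$conn_file.tmp" &&
        mv "$conn_file.tmp" "$conn_file"
}

# 3. Start the services afterwards, from the hyperion directory:
#   inject_password ../../elastic-start-local/.env config/connections.json &&
#       docker compose up -d
```

Writing to a temporary file and renaming it avoids `sed -i`, whose syntax differs between GNU and BSD sed.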

Directory Structure Reminder

This automatic configuration depends on the directory structure mentioned earlier, with both elastic-start-local and hyperion-docker directories at the same level.

This automated process ensures that Hyperion is properly configured to communicate with the Elasticsearch instance without manual intervention.

Alternative Manual Configuration

If you prefer to configure manually, you can edit the connections.json file in the config directory and replace "ELASTIC_PASSWORD" with the password found in the elastic-start-local/.env file, then run docker compose up -d.

Troubleshooting

Configuring connections.json

The connections.json file is crucial for the proper functioning of Hyperion as it defines how Hyperion connects to all required services. If you encounter connection issues, or if you're using a custom infrastructure setup, you may need to adjust the host configurations in this file.

Host Configuration

The default configuration assumes that:

- RabbitMQ and Redis are running as Docker services named "rabbitmq" and "redis" respectively

- Elasticsearch and MongoDB are accessible via host.docker.internal (which resolves to the host machine from inside Docker containers)

Here's how to modify the configuration for different scenarios:

Using services outside Docker or with different names

{
  "amqp": {
    "host": "your-rabbitmq-host:5672", // Change if RabbitMQ is not running as "rabbitmq" service
    // ...other AMQP settings
  },
  "elasticsearch": {
    "host": "your-elasticsearch-host:9200", // Change if Elasticsearch is at a different location
    "ingest_nodes": [
      "your-elasticsearch-host:9200"
    ],
    // ...other Elasticsearch settings
  },
  "redis": {
    "host": "your-redis-host", // Change if Redis is not running as "redis" service
    "port": "6379"
  },
  "mongodb": {
    "host": "your-mongodb-host", // Change if MongoDB is at a different location
    // ...other MongoDB settings
  }
}

Common connection issues and solutions

- Connection refused errors: Verify that the service is running and that the hostname/port is correct

- Authentication failures: Ensure that usernames and passwords are correctly set in the configuration

- Docker networking issues: If services can't reach each other, verify they are on the same Docker network (hyperion)

- Host resolution issues: If using custom hostnames, ensure they are properly resolved (you may need to add entries to /etc/hosts or use Docker's DNS)
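For the networking case, the containers attached to the shared network can be listed with `docker network inspect` and a Go template. The sketch below wraps that in two hypothetical helpers, `network_members` and `on_network`; the container name in the usage line is a placeholder.

```shell
#!/bin/sh
# List the containers attached to a Docker network, one name per line.
# Both function names are hypothetical helpers used only in this sketch.
network_members() {
    docker network inspect "$1" \
        --format '{{range .Containers}}{{.Name}}{{"\n"}}{{end}}'
}

# Succeed if the named container is attached to the network.
on_network() {
    network_members "$1" | grep -qxF -- "$2"
}

# Usage (my-container is a placeholder name):
#   on_network hyperion my-container ||
#       docker network connect hyperion my-container
```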

Testing connections

You can test connections to each service using appropriate tools:

- For Elasticsearch: curl -u elastic:your_password http://host:9200

- For RabbitMQ: curl -u rabbitmq:rabbitmq http://host:15672/api/overview

- For Redis: redis-cli -h host -p 6379 ping

- For MongoDB: mongosh --host host --port 27017
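To run several of these checks in one pass and get a one-line verdict for each, a small wrapper can be sketched as below. The name `run_check` is hypothetical, and `host` and `your_password` in the usage lines are the same placeholders used above.

```shell
#!/bin/sh
# Run a command quietly and print a one-line OK/FAIL summary for it.
# run_check is a hypothetical helper name used only in this sketch.
run_check() {
    label=$1
    shift
    if "$@" >/dev/null 2>&1; then
        echo "OK   $label"
    else
        echo "FAIL $label"
        return 1
    fi
}

# Usage (replace host and your_password with your own values):
#   run_check elasticsearch curl -fsS -u elastic:your_password http://host:9200
#   run_check redis redis-cli -h host -p 6379 ping
```

The verdict depends only on the exit status of the wrapped command, so each tool must exit non-zero on failure for the summary to be meaningful.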

For issues related to Elasticsearch/Kibana, consult the official Elastic documentation.

For issues related to Hyperion:

- Check if all layers are working correctly

- Check if the Elasticsearch credentials are configured correctly in the connections.json file

- Check the service logs using docker compose logs -f

Next steps

Feel free to modify the configurations according to your needs. All configuration files are located in hyperion/config or nodeos/leap/config.

For more details, consult the Hyperion Configuration Section.